Towards a European Civil Liability Regime Adapted to Artificial Intelligence: In Favour of an Autonomous, Strict and Harmonised Model

The European Parliament study PE 776.426, authored by Professor Andrea Bertolini and delivered in July 2025 to the JURI Committee, reflects a radical transformation in the European legal approach to civil liability in the context of artificial intelligence systems (AIS). What was originally conceived as a liability-centred framework, oriented towards ex post redress and deterrence, has been progressively supplanted by a model of ex ante compliance-based governance, now embodied in Regulation (EU) 2024/1689 laying down harmonised rules on artificial intelligence (AI Act). This shift has occurred at the expense of the previously envisaged harmonisation of civil liability rules.

The announced withdrawal of the proposed AI Liability Directive (AILD) by the European Commission in its 2025 Work Programme illustrates the abandonment of a liability-first strategy in favour of a more fragmented and risk-based regulatory paradigm.

“Soft-law elements originally conceived as auxiliary have become the core of EU hard-law intervention, while the envisioned liability regime has been indefinitely postponed” (p. 22).

The revised Product Liability Directive (PLDr), Directive (EU) 2024/2853 adopted in October 2024, nevertheless remains, in the study's assessment, fundamentally unsuited to the legal challenges posed by AI. Although the PLDr brings software within the notion of “product” and introduces procedural innovations (obligations to disclose evidence and rebuttable presumptions), it still relies on the classical notion of “defect”, which is ill-suited to autonomous systems whose behaviour evolves after they are placed on the market.

The study summarises its central criticism of the PLDr as follows:

“The PLDr leaves intact the substantive limitations that rendered the PLD largely ineffective in the first place” (p. 11).

The Proposal for a Directive on adapting non-contractual civil liability rules to artificial intelligence (COM(2022) 496 final) sought to introduce procedural harmonisation tools, notably rebuttable presumptions of fault and causation. However, it remains embedded within national fault-based systems and introduces concepts (such as “objective fault”) that conflict with existing domestic doctrines.

According to the study:

“Its adoption is unlikely to prevent the proliferation of alternative regulatory paradigms at Member State level” (p. 12).

At the core of the study’s recommendation lies the adoption of an autonomous, strict liability regime specifically tailored to high-risk AI systems (h-AIS) as defined under the AI Act. This approach, inspired by the European Parliament's 2020 resolution on a civil liability regime for artificial intelligence (RLAI) and by the findings of the Expert Group on Liability and New Technologies, is grounded in efficiency, clarity, and legal certainty.

The study advocates imposing liability on a single operator: the entity that exercises control over the functioning of the AIS and derives economic benefit from it. This functional criterion dispenses with complex causal analysis and avoids the difficulties of apportioning liability among multiple parties.

“Liability should be imposed on the single entity that controls the AI system and economically benefits from its deployment” (p. 12).

For consistency across the EU legal framework, the notion of “operator” should correspond to the provider and/or deployer as defined under Article 3 of the AI Act.

This alignment would streamline the regulatory framework and strengthen its internal coherence.

On the merits of a strict liability model, the study concludes:

“A truly strict liability rule […] enhances clarity and foreseeability of outcomes” (p. 102).

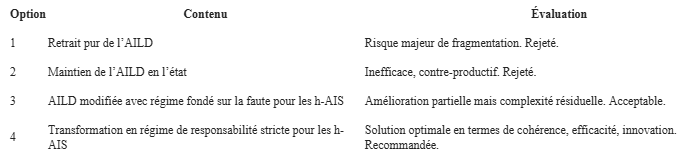

The study evaluates four legislative options, each assessed against the criteria of legal coherence, efficiency, and innovation impact:

“While Option 4 is the optimal solution, Option 3 could still represent a commendable improvement over the status quo” (p. 13).

The current trajectory of European AI regulation risks entrenching national divergences and regulatory incoherence. The withdrawal of the AILD without an alternative would leave a regulatory vacuum in a domain where legal certainty is essential. Conversely, the adoption of an autonomous, strict liability regime for high-risk AI systems would provide a coherent, effective, and innovation-friendly legal framework — consistent with the Union’s internal market objectives and the principles of technological accountability.

The study PE 776.426 constitutes a compelling doctrinal and policy-based argument in favour of a functional, risk-oriented and economically efficient model of AI liability, anchored in strict (no-fault) liability and regulatory clarity. In the face of emerging technological risks, the law must not remain merely adaptive; it must proactively structure accountability within the European digital ecosystem.